What is it?

PCI Express, which stands for Peripheral Component Interconnect Express, is a type of interconnect developed by PCI-SIG (the PCI Special Interest Group). PCI-SIG is a group of more than 900 companies that define and maintain the PCI specifications.

PCIe is an interface that is used to connect modern devices and expansion cards inside your PC, workstation, or server to the motherboard chipset or the processor. It has completely replaced legacy interfaces such as PCI, PCI-X & AGP. The legacy PCI and PCI-X slots were used for regular expansion cards such as network interface cards and disk controllers, whilst AGP was designed to support graphics cards only.

PCIe features many improvements over previous standards, such as a lower pin count, higher bus throughput, better performance scaling for bus devices, detailed error detection and reporting, and hot-swap functionality in some implementations. It supports bi-directional traffic and carries power as well as data.

PCI Express is often shortened to PCIe, PCI-e, or PCI-E. In this blog, we will refer to it as PCIe, which we believe is the most correct contraction.

What devices use PCIe?

The most important devices and interfaces inside a computer are connected via a PCIe bus. To name a few, expansion cards such as GPUs, RAID cards, sound cards, and even SSDs (specifically NVMe) use PCIe. Several essential on-board devices, such as network, storage, and USB controllers, also communicate with the chipset and the CPU via PCIe.

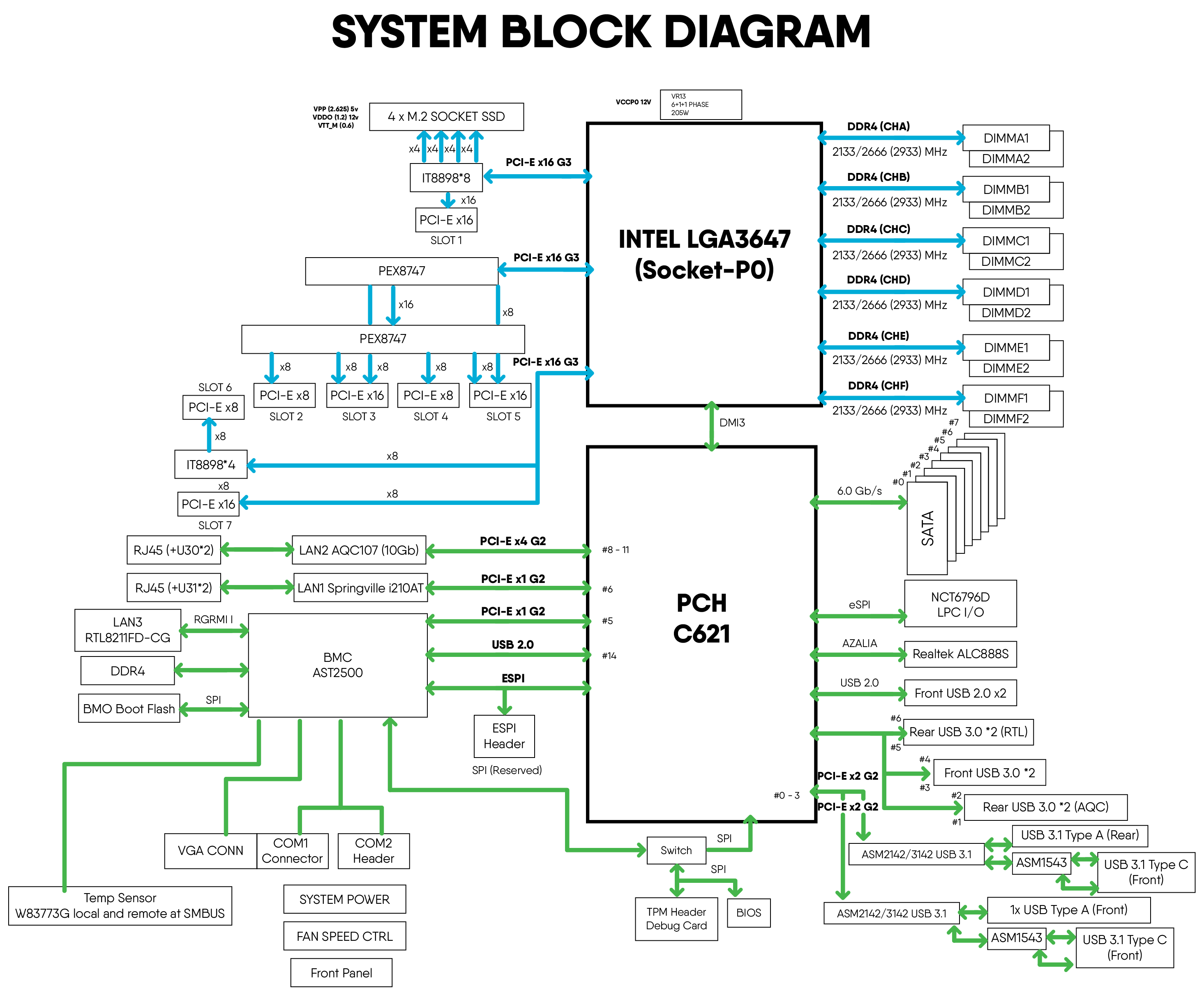

Pic: An example Supermicro motherboard block diagram showing how the devices and PCIe expansion slots are connected to the chipset and the CPU

PCIe 3.0, PCIe 4.0? What is the difference between the generations?

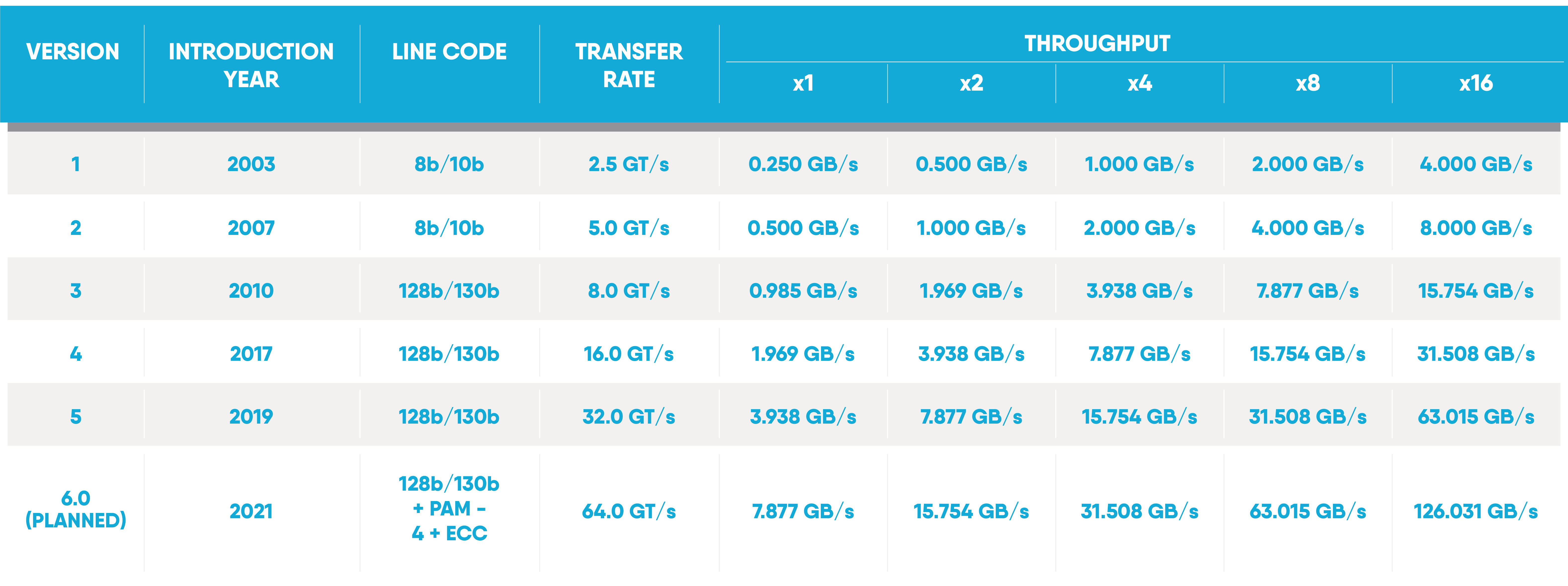

Modern PCs, servers, and workstations currently use either the 3rd (PCIe 3.0) or 4th (PCIe 4.0) generation of PCI Express. Let’s go back to the inception of PCIe to see how this standard has evolved.

PCIe 1.0

Introduced in 2003, this version featured many improvements over AGP and PCI-X, such as much higher bus throughput, a lower pin count, a smaller physical port, better error detection and reporting, and better performance scaling for bus devices. It achieved a transfer rate of 2.5GT/s (gigatransfers per second) and up to 250MB/s of throughput per lane. Graphics adapters were among the first device types to use this standard, because the AGP interface they had been using limited their memory performance quite significantly.

PCIe 2.0

This version was introduced in 2007 and provided further improvements to the PCIe standard. The most noticeable improvement was the doubling of the transfer rate and throughput of PCIe 1.0, to 5GT/s and 500MB/s per lane respectively. The first motherboard chipsets that took advantage of PCIe 2.0 were the Intel X38 and the AMD 700 series.

PCIe 3.0

The specification for this standard was finalised in 2010 and brought further speed improvements, to 8GT/s and 985MB/s of throughput per lane, as well as optimisations for enhanced signalling and data integrity, PLL improvements, clock data recovery, and channel enhancements.

PCIe 4.0

This version was officially announced in 2017. It was first supported by IBM’s Power9 CPU, in systems such as the AC922, and later by AMD Zen 2 based platforms. It doubled the speed of PCIe 3.0 again, to 16GT/s and roughly 1969MB/s of throughput per lane.

PCIe 5.0 & 6.0

Both specs have been finalised by PCI-SIG, and each is expected to double the transfer rate and bandwidth of the generation before it. The first platforms expected to feature PCIe 5.0 will be Intel’s Sapphire Rapids and AMD’s Zen 4.

Additionally, there were a few minor versions of PCIe along the way, such as 2.1 and 3.1, that brought some minor improvements to the design. If you are interested in finding out more about them, we recommend checking the PCI-SIG website.
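The per-lane throughput figures quoted for each generation follow from the transfer rate and the line encoding used on the link. The sketch below is a rough illustration rather than a reference implementation: it assumes the commonly documented encodings (8b/10b for PCIe 1.0 and 2.0, 128b/130b for PCIe 3.0 and 4.0), which are not stated in this article, and the table and function names are our own.

```python
# Per-lane throughput derived from transfer rate and line-encoding efficiency.
# Assumption (not stated in the article): PCIe 1.0/2.0 use 8b/10b encoding,
# PCIe 3.0/4.0 use 128b/130b encoding.
GENERATIONS = {
    # generation: (transfer rate in GT/s, payload bits, total bits on the wire)
    "1.0": (2.5, 8, 10),
    "2.0": (5.0, 8, 10),
    "3.0": (8.0, 128, 130),
    "4.0": (16.0, 128, 130),
}


def lane_throughput_mb_s(generation: str) -> float:
    """Return the theoretical one-direction throughput of one lane in MB/s."""
    gt_per_s, payload_bits, total_bits = GENERATIONS[generation]
    usable_gbit_s = gt_per_s * payload_bits / total_bits
    return usable_gbit_s * 1000 / 8  # Gbit/s -> MB/s, decimal units


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(f"PCIe {gen}: {lane_throughput_mb_s(gen):.0f} MB/s per lane")
```

These are theoretical maximums in one direction; real-world throughput is lower once protocol overhead is taken into account.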

Controllers and physical interconnects

In the past, PCIe buses were controlled by the motherboard chipset, which was in turn connected to the CPU via a proprietary link. Ultimately, all the PCIe lanes came from the chipset, but this solution had several bottlenecks and latency issues. In modern systems, the PCIe controller is built into the CPU, so most of the PCIe connectivity comes from the CPU socket(s), which has greatly improved communication between the CPU and PCIe devices.

Each CPU PCIe controller comes with a limited number of supported PCIe lanes; the AMD EPYC 7713P, for example, comes with 128 PCIe 4.0 lanes. Some of those lanes are used by devices that are built into the motherboard such as network and storage controllers. The remaining ones are then split amongst the different expansion slots laid down on the motherboard.
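As a rough illustration of this lane budgeting, the sketch below starts from the 128 lanes mentioned above and subtracts a hypothetical set of on-board devices. The device names and lane counts are invented for the example and do not describe any particular motherboard.

```python
from typing import Mapping

# The 128-lane total comes from the EPYC 7713P example in the text.
TOTAL_CPU_LANES = 128

# Hypothetical on-board devices and the lanes each one consumes.
ONBOARD_DEVICES = {
    "dual 10GbE controller": 8,
    "M.2 NVMe slot": 4,
    "storage controller": 4,
}


def lanes_left_for_slots(total: int, onboard: Mapping[str, int]) -> int:
    """Return the lanes still available for expansion slots."""
    used = sum(onboard.values())
    if used > total:
        raise ValueError("on-board devices need more lanes than the CPU provides")
    return total - used


def max_slots(remaining: int, width: int) -> int:
    """Return how many slots of a given width (16 for x16) fit in the budget."""
    return remaining // width


if __name__ == "__main__":
    remaining = lanes_left_for_slots(TOTAL_CPU_LANES, ONBOARD_DEVICES)
    print(f"{remaining} lanes left, enough for {max_slots(remaining, 16)} x16 slots")
```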

The most common physical interconnects are the card expansion slots that can be found on every computer motherboard. These slots come in a variety of physical sizes, each representing a specific number of PCIe lanes connected to the slot. Each slot is usually labelled with the supported generation and the number of lanes to indicate its capability. For example, “PCIe 4.0 x16” means the slot supports 16 lanes of PCIe 4.0.
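If you need to handle such labels in a script, for instance when building a hardware inventory, a small parser is enough. This is a minimal sketch that assumes labels are written exactly in the “PCIe 4.0 x16” style described above; the `SlotLabel` type and `parse_slot_label` function are hypothetical names of our own.

```python
import re
from typing import NamedTuple


class SlotLabel(NamedTuple):
    generation: str  # e.g. "4.0"
    lanes: int       # e.g. 16


# Matches labels written like "PCIe 4.0 x16".
_LABEL_RE = re.compile(r"^PCIe\s+(\d+\.\d+)\s+x(\d+)$", re.IGNORECASE)


def parse_slot_label(label: str) -> SlotLabel:
    """Split a slot label into its PCIe generation and lane count."""
    match = _LABEL_RE.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognised slot label: {label!r}")
    return SlotLabel(generation=match.group(1), lanes=int(match.group(2)))


if __name__ == "__main__":
    print(parse_slot_label("PCIe 4.0 x16"))
```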

Common PCIe expansion slots come in x1, x4, x8, and x16 physical configurations. They are upwards and downwards compatible, which means that, for example, you can plug a sound card with a physical x1 interface into an x16 slot on the motherboard. The card will work, drawing power and the required PCIe x1 bandwidth from the physical x16 slot; the remaining 15 PCIe lanes of the slot will be unconnected and unused.

This principle can work the other way round, assuming certain conditions are met. You could, in theory, connect a PCIe x16 device (such as a graphics card) to an x1 slot, so long as that slot is ‘open-ended’, making it physically compatible, or a suitable adapter or riser is used to adapt the physical slot. Either way, the x16 device would work but with reduced PCIe bandwidth, being limited to the x1 connection.
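The two cases above boil down to a simple rule: the link runs at the smaller of the two lane counts, provided the card physically fits. The sketch below models that rule for the x1, x4, x8, and x16 sizes listed in this article; the function names and the `open_ended` flag are our own simplification, and an adapter or riser is treated the same as an open-ended slot.

```python
# Slot and card widths covered in this article.
VALID_WIDTHS = {1, 4, 8, 16}


def fits_physically(card_lanes: int, slot_lanes: int, open_ended: bool = False) -> bool:
    """A card fits if it is no wider than the slot, or if the slot is open-ended."""
    return card_lanes <= slot_lanes or open_ended


def negotiated_lanes(card_lanes: int, slot_lanes: int) -> int:
    """Lanes actually used: the smaller of what the card and the slot offer."""
    if card_lanes not in VALID_WIDTHS or slot_lanes not in VALID_WIDTHS:
        raise ValueError("lane counts must be one of 1, 4, 8 or 16")
    return min(card_lanes, slot_lanes)


if __name__ == "__main__":
    # x1 sound card in an x16 slot: 1 lane in use, 15 left unused.
    used = negotiated_lanes(1, 16)
    print(f"x1 card in x16 slot: {used} lane used, {16 - used} unused")
    # x16 graphics card in an open-ended x1 slot: limited to 1 lane.
    if fits_physically(16, 1, open_ended=True):
        print(f"x16 card in open-ended x1 slot: {negotiated_lanes(16, 1)} lane used")
```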

Pic: PCIe physical slot sizes

Pic: The latest NVIDIA A100 GPU is using PCIe 4.0 x16 interface

There are also other PCIe interfaces that can be found on the motherboards that are becoming more popular. Here are a few that you will be able to find on Supermicro motherboards:

M.2, also known as NGFF (Next Generation Form Factor), is used for directly mounted expansion cards and comes with up to 4 PCIe lanes. M.2 devices are installed directly into a dedicated slot on the motherboard. They are commonly used for fast NVMe SSD boot drives (in servers and workstations) and for network modules (Wi-Fi, Bluetooth, 5G) in laptops and small form factor systems.

Mini SAS HD (SFF-8643) connections usually represent 4 PCIe lanes per connector and are designed to connect enterprise or datacentre grade U.2 NVMe SSD storage drives to the host system, typically via a storage backplane.

Pic: Supermicro X11SCA motherboard comes with several PCIe expansion options that include different sizes of PCIe slots as well as M.2 and U.2

OCuLink (SFF-8611) is a cable interface that has largely replaced SFF-8643 for PCIe NVMe SSDs. It has a smaller form factor and comes in 4 and 8 lane variants. The first version only supported PCIe 3.0, while the second version, called OCuLink 2, is capable of supporting PCIe 4.0. It can carry SATA as well as PCIe, and can be used to connect internal storage devices as well as external PCIe I/O expansion or external PCIe attached storage.

Pic: OCuLink cables are used to connect twenty PCIe 3.0 NVMe SSDs in the Supermicro SYS-1029UZ-TN20R25M 1U server

Slim SAS, or Slimline SAS, is the next generation of ultra-high-speed interconnect that can carry PCIe 4.0 communications through the cable. It comes in two different sizes to provide 4 and 8 lane options and is used to connect PCIe risers or extender boards as well as PCIe NVMe storage devices. It is compliant with the T10/Serial Attached SCSI (SAS-4) standard and offers superior signal integrity over Mini SAS solutions.

Pic: SlimSAS x8 cables are used to connect the front backplane that supports PCIe 4.0 NVMe SSDs inside the Supermicro SYS-740GP-TNRT

Compatibility

The PCIe standard is backward and forward compatible. The physical expansion slot sizes have remained unchanged since the 1st generation; only their electrical characteristics have been updated. This means that it is possible to use devices designed for older generations of PCIe in newer generation PCIe slots and vice versa. However, using a later generation PCIe device in an older generation slot can result in performance degradation due to the lower bandwidth of the older generation bus.

For example, if you use a dual port 100GbE Mellanox PCIe 4.0 x16 network card in an older generation PCIe 3.0 x16 slot, it will function but will not be able to reach its peak bandwidth of 100Gb/s per port when both ports are used at the same time. To back this up with some quick maths, 100Gb/s equals 12.5GB/s, which means that two ports need 25GB/s of bandwidth. The theoretical speed of PCIe 3.0 is 985MB/s per lane, so with all 16 lanes the card has ~15.76GB/s of bandwidth available from the slot. This means that you can expect a performance reduction of roughly 37% when using this card in a PCIe 3.0 x16 slot instead of a PCIe 4.0 one.
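The same quick maths can be written as a short script, which makes it easy to repeat for other cards and slots. This is a minimal sketch using decimal units throughout (1GB = 1000MB) and theoretical per-lane figures, so it ignores protocol overhead; the function names are our own.

```python
def required_gb_s(ports: int, gbit_per_port: float) -> float:
    """Bandwidth the card needs with every port at line rate, in GB/s."""
    return ports * gbit_per_port / 8  # 8 bits per byte


def slot_bandwidth_gb_s(lane_mb_s: float, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a slot, in GB/s."""
    return lane_mb_s * lanes / 1000  # MB/s -> GB/s, decimal units


def shortfall_fraction(required: float, available: float) -> float:
    """Fraction of the required bandwidth the slot cannot deliver (0 if none)."""
    return max(0.0, 1 - available / required)


if __name__ == "__main__":
    needed = required_gb_s(ports=2, gbit_per_port=100)        # dual port 100GbE
    available = slot_bandwidth_gb_s(lane_mb_s=985, lanes=16)  # PCIe 3.0 x16
    print(f"needed:    {needed:.2f} GB/s")
    print(f"available: {available:.2f} GB/s")
    print(f"shortfall: {shortfall_fraction(needed, available):.0%}")
```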

Not all PCIe expansion cards require the full PCIe bandwidth of their physical interface, however. For example, the latest NVIDIA RTX 3080 PCIe 4.0 gaming GPU should work fine in a PCIe 3.0 slot without a significant performance hit because, in gaming, the graphics processing is done on the GPU itself and the textures are stored in VRAM, so in most cases the PCIe bus will be underutilised.

If you are unsure about the compatibility of your PCIe devices, or are not certain which solution would work best for your business, we are here to help. Boston Labs is all about enabling our customers to make informed decisions and select the right hardware, software, and overall solution for their specific challenges. Please do get in contact with our sales team, who will be able to recommend a solution and arrange a test drive of the latest technologies from our close partners at NVIDIA, Intel, AMD, Mellanox and many more - we look forward to hearing from you all.

Written by:

Tom Michalski

Senior Field Applications Engineer