In its keynote today, NVIDIA announced a number of new products that bring a new generation of GPUs to market alongside the existing line-up of DGX Station, DGX-1 and DGX-2.

The news is welcome amid the ongoing COVID-19 lockdown, which led to the cancellation of GTC San Jose earlier this year. These announcements are the latest in a wave of innovation and expansion at NVIDIA, following its purchase of Mellanox Technologies last year and the acquisition of Cumulus announced just this week.

The NVIDIA A100 GPU delivers unparalleled acceleration at every scale for AI, data analytics and high-performance computing (HPC), tackling the world's toughest computing challenges. A100 is now the engine of the NVIDIA data center platform: you can efficiently scale to thousands of GPUs or, with the new Multi-Instance GPU (MIG) technology for elastic GPU computing, partition a single A100 into as many as seven independent GPU instances. The A100 offers up to 7x higher throughput than V100, and because multiple instances can run simultaneously on each GPU, you have the flexibility to use the GPU as a whole or as independent instances.

"MIG will have a profound impact on how we will run datacentres," said NVIDIA CEO Jensen Huang.

Ampere is not only incredibly fast for training and inference; it can also partition itself into one large GPU for scale-up applications or many smaller GPUs to maximise scale-out. Whether the workload is inference or public cloud, you now have a single datacentre acceleration architecture, available on the NVIDIA DGX A100 and HGX A100 platforms.

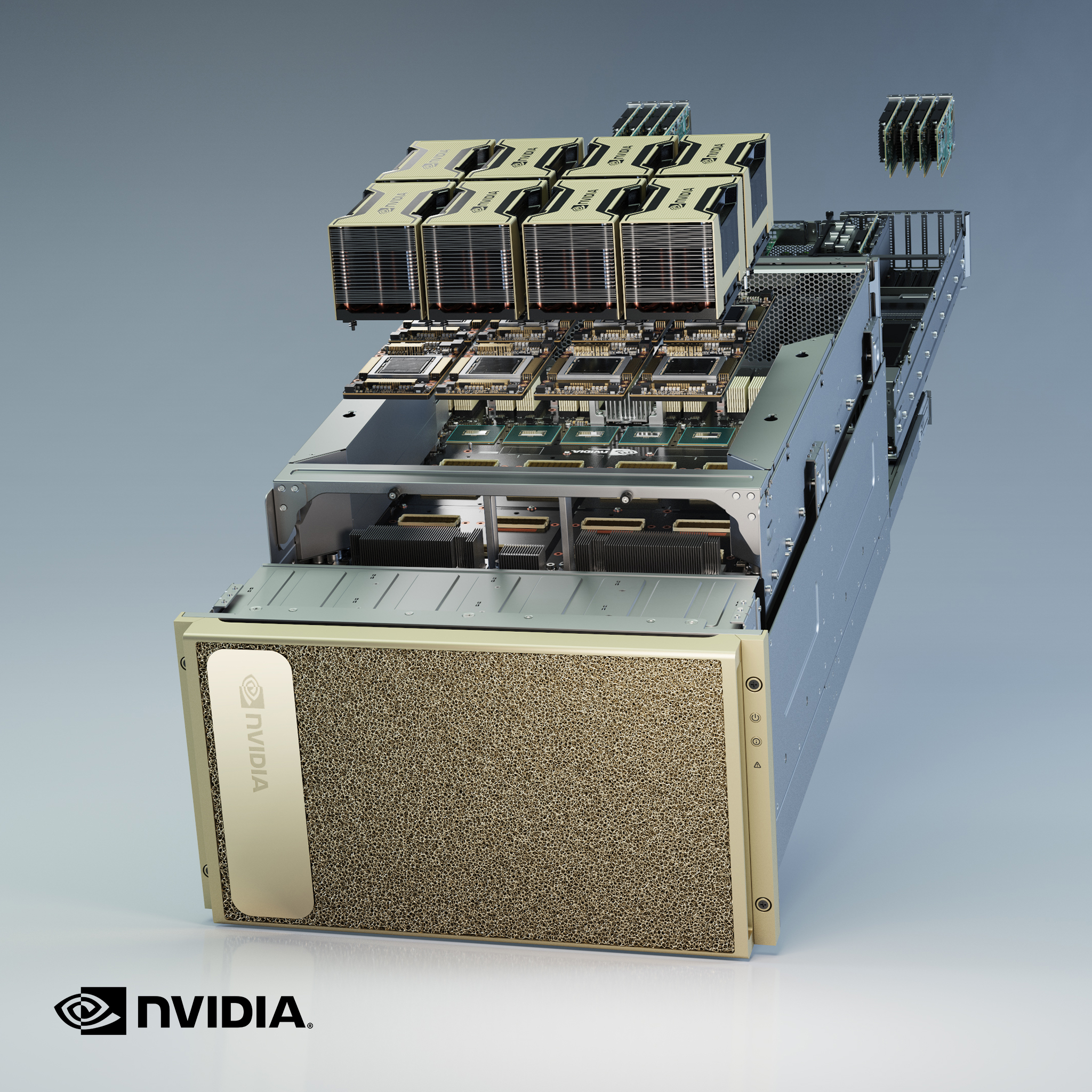

NVIDIA DGX A100 is the universal system for all AI workloads. It integrates eight of the world's most advanced NVIDIA A100 Tensor Core GPUs, making it the first AI system to deliver 5 petaFLOPS. Enterprises can now build a complete workflow, from data preparation and analytics to training and inference, on one easy-to-deploy AI infrastructure.
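As a rough worked check of the 5 petaFLOPS figure, the arithmetic below multiplies the per-GPU peak by the eight GPUs in the system. It assumes (this is not stated in the announcement above) that the headline number counts peak FP16 Tensor Core throughput with structured sparsity, which NVIDIA's A100 specifications list at 624 TFLOPS per GPU; dense FP16 is half that, so the same system would come to roughly 2.5 petaFLOPS without sparsity.

```latex
\[
8 \times 624\ \text{TFLOPS} = 4{,}992\ \text{TFLOPS} \approx 5\ \text{petaFLOPS}
\qquad\text{(dense: } 8 \times 312\ \text{TFLOPS} = 2{,}496\ \text{TFLOPS)}
\]
```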

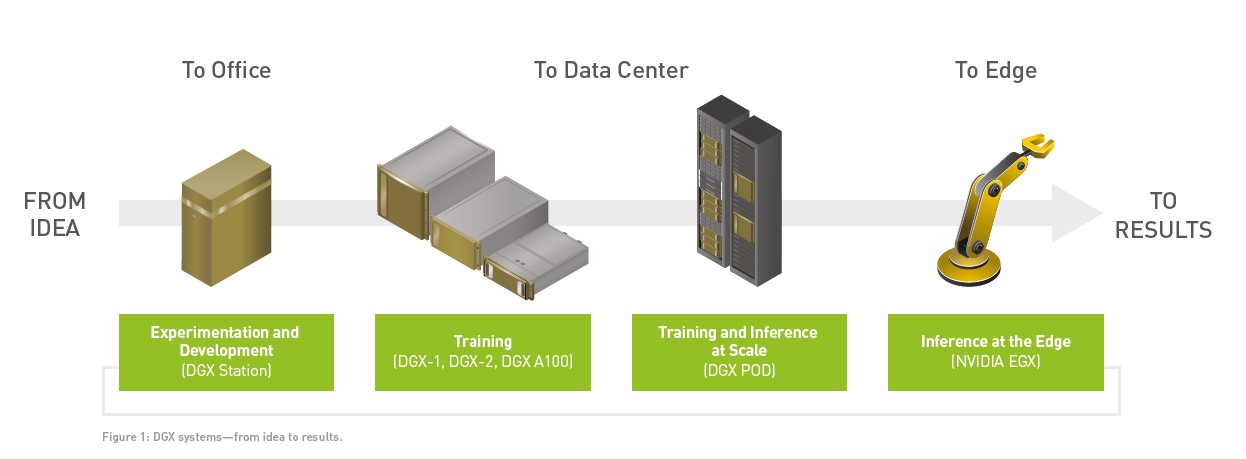

NVIDIA sees DGX A100 fitting into the existing line-up of DGX systems and its wider product catalogue, which spans the journey from idea to results across the office, the datacentre and the edge. Combined with innovative GPU-optimized software and simplified management tools, these fully integrated systems are designed to give data scientists the most powerful tools for AI development, from the office to the data center. Experiment sooner, train the largest models faster, and get insights from data starting from day one.

End-to-end AI development productivity and performance are enabled by the NVIDIA DGX software stack that powers each DGX system. This full-stack suite of pre-optimized AI software includes a DGX-optimized OS, drivers, libraries, and containers, plus access to NGC for additional assets such as pre-trained models, model scripts, and industry solutions. The simplified deployment, revolutionary performance, and enterprise-grade quality of DGX systems insulate enterprises from open-source churn, delivering effortless productivity for data scientists and developers. Unlike off-the-shelf commodity hardware, DGX systems benefit from ongoing software stack innovation, delivered in containerized versions to DGX customers. This ensures continual performance improvement over time and represents savings of hundreds of thousands of dollars in software engineering OpEx.

“NVIDIA optimized software allowed us to do more. We saw 1.5X faster training on DGX-optimized TensorFlow. Compared with 1,680 images per second on our home-grown 'optimized' TensorFlow software stack, we were seeing 2,600 images per second on the NVIDIA DGX-optimized stack, using ResNet50. Two years later, with the latest software optimizations from NVIDIA, we saw 4X additional improvement in performance on the same hardware. Impressive work!” - Global Stock Photography Company
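The throughput figures in this quote can be checked with a few lines of arithmetic. The sketch below computes the speedup from 1,680 to 2,600 images per second, which comes out at about 1.55x, consistent with the quoted "1.5X". The helper name `speedup` is our own, and reading the later "4X additional improvement" as a multiplier on the 2,600 images-per-second figure is an assumption, since the quote does not state which baseline it refers to.

```python
def speedup(new_rate: float, old_rate: float) -> float:
    """Return how many times faster new_rate is than old_rate."""
    if old_rate <= 0:
        raise ValueError("old_rate must be positive")
    return new_rate / old_rate


home_grown = 1680     # images/s on the home-grown TensorFlow stack (from the quote)
dgx_optimized = 2600  # images/s on the NVIDIA DGX-optimized stack (from the quote)

initial = speedup(dgx_optimized, home_grown)
print(f"Initial speedup: {initial:.2f}x")  # 1.55x, quoted as roughly 1.5X

# Assumption: the later "4X additional improvement" applies on top of 2,600 images/s.
later_rate = dgx_optimized * 4
print(f"Implied later throughput: {later_rate} images/s")
print(f"Overall vs home-grown stack: {speedup(later_rate, home_grown):.2f}x")
```

Under that assumption, the overall gain over the original home-grown stack would be a little over 6x; a different baseline for the "4X" would change that figure.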

Effortless Productivity—From Prototype to Production

Your developers need a fast start, with easy access to powerful compute that just works, without being tethered to infrastructure. Start quickly by experimenting and developing on DGX Station, a server-class system for your data science teams that doesn't require a data center. Train models on DGX-1, DGX-2, and DGX A100 when you need the fastest time-to-solution, and train AI at scale with DGX POD, a turnkey solution. As your AI development journey progresses, each of these solutions lets your most important work move effortlessly from one system to the next without changing any code, so that you can right-size resources for the task at hand.

NVIDIA DGX A100 unifies all of these AI workloads into a consolidated system with optimized software that is the foundational building block for AI infrastructure. DGX A100 further lowers TCO not only by offering the highest performance but also through improved infrastructure utilization, with the flexibility to handle multiple parallel workloads from multiple users.


Boston is a proud partner of Supermicro, which today also announced three servers featuring NVIDIA A100 GPUs, once again positioning itself as a fastest-to-market, highest-performance server manufacturer. The three servers give those running AI compute, model training, deep learning and high-performance computing workloads a choice of three different form factors. The upcoming 4U HGX A100 (SYS-420GP-TNAR) features 8x A100 SXM4 GPUs and offers Titanium-level efficiency, while the AS -4124GS-TNR also takes 8x GPUs, but in the PCIe 4.0 form factor.

The most interesting of the three, however, is a compact yet high-density 2U server with 4x A100 SXM4 GPUs that takes advantage of PCIe 4.0 thanks to dual AMD EPYC™ 7002 Series CPUs. This really is a benchmark-busting solution for those who aren't yet ready to scale to the 8x GPU configurations offered by the DGX A100 and HGX A100.
